Privacy und Technischer Datenschutz

- Typ: Seminar

- Lehrstuhl: KIT-Fakultäten - KIT-Fakultät für Informatik - KASTEL – Institut für Informationssicherheit und Verlässlichkeit - KASTEL Strufe

- Semester: Sommer 2023

-

Dozent:

Prof. Dr. Thorsten Strufe

M.Sc. Markus Raiber

M.Sc. Patricia Guerra-Balboa - SWS: 2

- LVNr.: 2400087

| Inhalt |

Das Seminar behandelt aktuelle Themen aus dem Forschungsgebiet des technischen Datenschutzes. Dazu gehören z.B.:

|

| Vortragssprache | Englisch |

| Termine |

18.4.2023, 14:00 - 15:30 Uhr, Geb. 50.34 Raum 252, Introduction, organization, and topics 23.4.2023 Topic preferences due 24.4.2023 Topic assignment 25.4.2023, 14:00 - 15:30 Uhr, Geb. 50.34 Raum 252, Basic Skills lesson 25.6.2023 Paper submission deadline 02.7.2023 Reviews due 09.7.2023 Revision deadline 17.7.2023 Presentations |

Topics

#1 Distributed Key Generation

Supervisor: Christoph Coijanovic

Distributed Key Generation (DKG) [1-3] allows a set of n participants to collaboratively generate a key pair. The resulting public key represents all participants. Importantly, each participant holds only a portion of the secret; the entire secret can only be reconstructed if all participants cooperate. DKG is used in many building blocks of anonymous communication, such as threshold signature schemes and distributed randomness beacons [1].

The goal of this seminar topic is to survey existing approaches to distributed key generation and to categorize them, e.g., based on underlying assumptions, overhead, and additional functionality.

References

[1] Komlo, Chelsea et al. "A Formal Treatment of Distributed Key Generation, and New Constructions." IACR Cryptol. ePrint Arch. (2023).

[2] Das, Sourav et al. "Practical Asynchronous Distributed Key Generation." IEEE Symposium on Security and Privacy (SP) (2022).

[3] Gurkan, Kobi et al. "Aggregatable Distributed Key Generation." IACR Cryptol. ePrint Arch. (2021).

#2 Analyzing Riot Dynamics

Supervisor: Daniel Schadt

Local device-to-device communication is useful in situations in which access to central internet infrastructure is limited, either because it's overloaded, destroyed by natural disasters or purposefully deactivated by a malicious party. An ad-hoc mesh network can then be used to relay messages from device to device, providing means to communicate e.g. during a protest that the government wants to shut down.

To build and evaluate such an ad-hoc system, it is important to understand the behavior of the participating people, in our case with a focus on protests. This includes for example the sizes and durations of such protests and the movement of the participants and the group. The goal of this seminar is to get an overview over the dynamics in a protest situation, both from theoretical models (e.g. social force), as well as from real world examples (e.g. Hong Kong).

References

- Lemos, Carlos et al. "Agent-Based modeling of protests and violent confrontation: a micro-situational, multi-player, contextual rule-based approach" (ResearchGate)

- Helbing, Dirk et al. "Social force model for pedestrian dynamics" (arXiv)

- https://github.com/srl-freiburg/pedsim_ros

- https://magazine.atavist.com/do-you-hear-the-people-sing-hong-kong-democracy-protests-china/

#3 A Survey of MANET Communication Approaches

Supervisor: Daniel Schadt

Even though device-to-device communication networks are useful if central infrastructure is unavailable, building such a network is challenging: It has to deal with special restrictions such as churn, the lack of a central authority, changing connections, unreliable transport, and more. There are a few suggestions for protocols that enable routing in such meshes, but they all have their own requirements, advantages and disadvantages.

This seminar is about writing a survey to classify existing solutions and compare them in performance, anonymity, setup requirements, and reliability.

References

- Zhang, Yanchao et al. "MASK: anonymous on-demand routing in mobile ad hoc networks" (Semantic Scholar)

- Hong, J. et al. "ANODR: anonymous on demand routing with untraceable routes for mobile ad-hoc networks" (Semantic Scholar)

- AL-Dhief, Fahad Taha et al. "MANET Routing Protocols Evaluation: AODV, DSR and DSDV Perspective" (PDF)

#4 A survey on privacy of ubiquitous EMR receivers

Supervisor: Julian Todt

Nowadays, devices that measure electro-magnetic radiation (EMR) are ubiquitous in everyday life (antennas, cameras, etc.). While it is common knowledge that visible light cameras can be used to identify individuals, there has also been increasing research in whether other EMR receivers can be used for privacy inferences. The goal of this seminar topic is to survey existing work analyzing the privacy impacts of common EMR receivers. Technologies to consider include but are not limited to thermal imaging [0], depth imaging (LiDAR) [1], Radar [2], WLAN [3] and 5G (+beamforming).

References

[0] Anghelone, David, Cunjian Chen, Arun Ross, and Antitza Dantcheva. "Beyond the Visible: A Survey on Cross-Spectral Face Recognition." arXiv, May 5, 2022. https://arxiv.org/abs/2201.04435.

[1] A. Wu, W. -S. Zheng and J. -H. Lai, "Robust Depth-Based Person Re-Identification," in IEEE Transactions on Image Processing, vol. 26, no. 6, pp. 2588-2603, June 2017, doi: 10.1109/TIP.2017.2675201.

[2] P. Zhao et al., "mID: Tracking and Identifying People with Millimeter Wave Radar," 2019 15th International Conference on Distributed Computing in Sensor Systems (DCOSS), Santorini, Greece, 2019, pp. 33-40, doi: 10.1109/DCOSS.2019.00028.

[3] Y. Zeng, P. H. Pathak and P. Mohapatra, "WiWho: WiFi-Based Person Identification in Smart Spaces," 2016 15th ACM/IEEE International Conference on Information Processing in Sensor Networks (IPSN), 2016, pp. 1-12, doi: 10.1109/IPSN.2016.7460727.

#5 A Relationship Between Syntactic and Semantic Privacy

Supervisor: Àlex Miranda-Pascual

There are two well-known families of privacy notions: syntactic and semantic. Syntactic notions specify conditions that an anonymized database should satisfy, while semantic notions describe guarantees that anonymization mechanisms should satisfy. Although the difference between them is clear, there is a relationship [1] between two important representatives of each: t-closeness (syntactic) and differential privacy (semantic). The goal of this seminar topic is to explore these two families by specifically understanding how we can guarantee t-closeness from differential privacy and vice versa, and what implications this relationship might have.

References

[1] J. Domingo-Ferrer, J. Soria-Comas (2015) "From t-closeness to differential privacy and vice versa in data anonymization". https://arxiv.org/abs/1512.05110

[2] J. Domingo-Ferrer, J. Soria-Comas (2018). "Connecting randomized response, post-randomization, differential privacy and t-closeness via deniability and permutation". arXiv preprint arXiv:1803.02139.

The following papers give an overview of differential privacy and t-closeness:

[3] C. Dwork, A. Roth (2014). "The Algorithmic Foundations of Differential Privacy." Found. Trends Theor. Comput. Science, pp. 211–407, Online at: https://www.cis.upenn.edu/~aaroth/Papers/privacybook.pdf.

[4] N. Li, T. Li and S. Venkatasubramanian, "t-Closeness: Privacy Beyond k-Anonymity and l-Diversity," 2007 IEEE 23rd International Conference on Data Engineering, 2007, pp. 106–115, doi: 10.1109/ICDE.2007.367856.

#6 Differentially Private Outlier Detection

Supervisor: Àlex Miranda-Pascual

Outliers are data instances that are distant from the rest of the instances in a database. Detecting outliers is an important task that remains necessary in fields such as management, medicine, and security. However, outliers can be sensitive and difficult to anonymize and protect. In this seminar topic, we are interested in studying outlier detection with differentially private guarantees, a well-known privacy notion. The main goal is to review the literature and develop a survey that can help to understand why private outlier detection is needed, what are the different ways to detect outliers, and what are the requirements and applications for each of the reviewed proposals.

References

- Degue, Kwassi H., Karthik Gopalakrishnan, Max Z. Li, and Hamsa Balakrishnan. "Differentially Private Outlier Detection in Correlated Data". In 2021 60th IEEE Conference on Decision and Control (CDC), 2735–42, 2021. https://doi.org/10.1109/CDC45484.2021.9683118.

- Okada, Rina, Kazuto Fukuchi, Kazuya Kakizaki, and Jun Sakuma. "Differentially Private Analysis of Outliers". arXiv, 26 July 2015. https://doi.org/10.48550/arXiv.1507.06763.

- Asif, Hafiz, Periklis A. Papakonstantinou and Jaideep Vaidya, "A Guide for Private Outlier Analysis," in IEEE Letters of the Computer Society, vol. 3, no. 1, pp. 29–33, 1 Jan.–June 2020, doi: 10.1109/LOCS.2020.2994342.

- Du, Min, Ruoxi Jia, and Dawn Song. "Robust anomaly detection and backdoor attack detection via differential privacy." arXiv preprint arXiv:1911.07116 (2019).

#7 An Introduction to Differentially Private Stochastic Gradient Descent

Supervisor: Àlex Miranda-Pascual

Stochastic gradient descent (SGD) is an iterative optimization algorithm for finding the parameters that provide the best fit between predicted and actual outputs, and is widely used in many machine learning models. Private learning has become a popular topic that has seen an increase in recent years, and in particular has introduced a differential private (DP) counterpart to SGD, called DP-SGD. The goal of this seminar topic is to develop a tutorial covering DP-SGD and other DP optimization algorithms for machine learning. The tutorial should cover how optimization algorithms generally can be made differentially private, what DP optimization algorithms exist and and what composition theorems are used to ensure DP.

References

- Abadi, Martin, Andy Chu, Ian Goodfellow, H. Brendan McMahan, Ilya Mironov, Kunal Talwar, and Li Zhang. "Deep Learning with Differential Privacy". In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, 308–18. CCS '16. New York, NY, USA: Association for Computing Machinery, 2016. https://doi.org/10.1145/2976749.2978318.

- Chaudhuri, Kamalika, Claire Monteleoni, and Anand D. Sarwate. "Differentially Private Empirical Risk Minimization". The Journal of Machine Learning Research 12, no. null (July 1, 2011): 1069–1109.

- Shokri, Reza, and Vitaly Shmatikov. "Privacy-Preserving Deep Learning". In Proceedings of the 22nd ACM SIGSAC Conference on Computer and Communications Security, 1310–21. CCS '15. Denver, Colorado, USA: Association for Computing Machinery, 2015. https://doi.org/10.1145/2810103.2813687.

- Song, Shuang, Kamalika Chaudhuri, and Anand D. Sarwate. "Stochastic Gradient Descent with Differentially Private Updates." In 2013 IEEE Global Conference on Signal and Information Processing, 245–48, 2013. https://doi.org/10.1109/GlobalSIP.2013.6736861.

- Bassily, Raef, Adam Smith, and Abhradeep Thakurta. "Private Empirical Risk Minimization: Efficient Algorithms and Tight Error Bounds." In 2014 IEEE 55th Annual Symposium on Foundations of Computer Science, 464–73, 2014. https://doi.org/10.1109/FOCS.2014.56.

- Papernot, Nicolas, Shuang Song, Ilya Mironov, Ananth Raghunathan, Kunal Talwar, and Ulfar Erlingsson. "Scalable Private Learning with PATE," 2018. https://openreview.net/forum?id=rkZB1XbRZ.

#8 Permission Modelling for Mixed Reality

Supervisor: Simon Hanisch

Sharing sensor data with applications on mobile devices today is dominated by a permission model. Applications request access to specific sensors and users have the choice to either allow or deny. However, with the evolution towards Mixed Reality (MR) applications, this model is under attack as most MR applications require access to most sensors even for their basic functionality. Therefore, this seminar will explore how the permission model for mobile devices can be adapted for MR devices. The aim is to provide an overview of how permission systems can be extended to provide more privacy and allow users to make more informed decisions about what information they want to share.

References

- FlowFence: Practical Data Protection for Emerging IoT Application Frameworks

- ContexIoT: Towards Providing Contextual Integrity to Appified IoT Platforms

- PABAU: Privacy Analysis of Biometric API Usage

- Apex: extending Android permission model and enforcement with user-defined runtime constraints

- Enabling Fine-Grained Permissions for Augmented Reality Applications with Recognizers

#9 Correlation framework in DP

Supervisor: Patricia Guerra-Balboa

Differential Privacy (DP) has become the formal and de facto mathematical standard for privacy-preserving data release. However, recently several articles demonstrated various shortcomings of this notion. Strong correlation in data is one example. DP inherently assumes the database is a simple, independent random sample. This implies that the records of the database are uniformly distributed (i.e., follow the same probability distribution) and independent (in particular, non-correlated). Unfortunately, this is not the case for several data and use cases, such as trajectory data. The goal of this project is first to understand and formalize why DP fails in the correlation framework. Second, to explore the new adaptations of DP to deal with correlation, particularly Bayesian DP, analyzing the privacy protection offered by this notion and the mechanisms to achieve it.

Topics: Privacy Foundations • Differential Privacy • Correlation • Privacy Analysis

Background References

- Basic knowledge about DP:

- Dwork, C., Roth, A., et al. (2014). The algorithmic foundations of differential privacy. Foundations and Trends® in Theoretical Computer Science, 9 (3–4), 211–407.

- Kasiviswanathan, S. P., & Smith, A. (2014). On the 'semantics' of differential privacy: A bayesian formulation. Journal of Privacy and Confidentiality, 6 (1).

- Statisic Analysis:

- Panaretos, V. M. (2016). Statistics for mathematicians. Compact Textbook in Mathematics. Birkhäuser/Springer, 142, 9–15.

- Sylvan, D. (2013). Introduction to mathematical statistics: by rv hogg, j. mckean, and at craig, boston, ma: Pearson, 2012, isbn 978-0-321-795434, x+ 694 pp., 110.67. Taylor & Francis Group. Retrieved from https://minerva.it.manchester.ac.uk/~saralees/statbook2.pdf

References

- Wang, H., Xu, Z., Jia, S., Xia, Y., & Zhang, X. (2021). Why current differential privacy schemes are inapplicable for correlated data publishing? World Wide Web, 24 (1), 1–23.

- Zhang, T., Zhu, T., Liu, R., & Zhou, W. (2022). Correlated data in differential privacy: definition and analysis. Concurrency and Computation: Practice and Experience, 34 (16), e6015.

- Yang, B., Sato, I., & Nakagawa, H. (2015). Bayesian differential privacy on correlated data. In Proceedings of the 2015 acm sigmod international conference on management of data (pp. 747–762).

Advanced References

- Chakrabarti, D., Gao, J., Saraf, A., Schoenebeck, G., & Yu, F.-Y. (2022). Optimal local bayesian differential privacy over markov chains. In Aamas (pp. 1563–1565).

- Cao, Y., Yoshikawa, M., Xiao, Y., & Xiong, L. (2018). Quantifying differential privacy in continuous data release under temporal correlations. IEEE transactions on knowledge and data engineering, 31 (7), 1281–1295.

- Rafiei, M., Elkoumy, G., & van der Aalst, W. M. (2022). Quantifying temporal privacy leakage in continuous event data publishing. arXiv preprint arXiv:2208.01886.

#10 Topology-based privacy notions in graph data

Supervisor: Patricia Guerra-Balboa

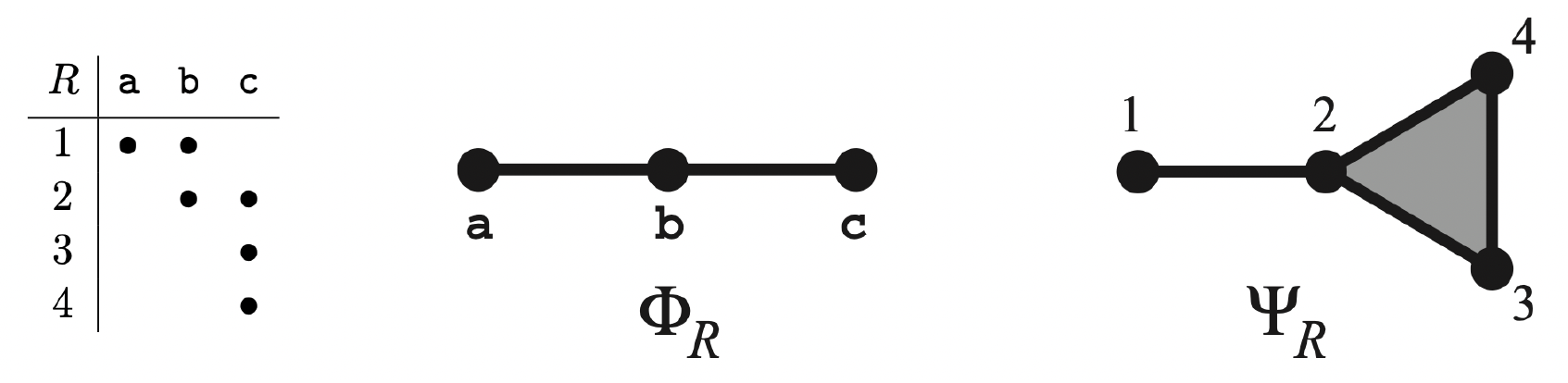

Information has intrinsic geometric and topological structures, arising from relative relationships beyond absolute values or types. For instance, the fact that two people did or did not share a meal describes a relationship independent of the meal’s ingredients. Multiple such relationships give rise to relations and their lattices. Lattices have topology. That topology informs the ways in which information may be observed, hidden, inferred, and dissembled. Privacy preservation may be understood as finding isotropic topologies, in which relations appear homogeneous. Moreover, the underlying lattice structure of those topologies has a temporal aspect, which reveals how isotropy may contract over time, thereby puncturing privacy. Erdmann exploit the topology of relations in databases to define two new syntactic privacy notions: Attribute and Association privacy.

The goal of this project is to understand privacy from the topological perspective using Dowker complexes. Particularly, we will focus on association privacy, and try to find the interpretation and application of this privacy metric in graph data.

Advanced goal: Once we understand how to measure privacy preservation and loss in graphs using topology we could go further explore the relation between these notions and the differential privacy notions defined for graphs. If the time and motivation of the student allow this we could also overview some topology-based privacy-enhancing techniques for graph data.

Topics: Topology • Data structure • Privacy of relations

References

Erdmann, M. (2017). Topology of privacy: Lattice structures and information bubbles for inference and obfuscation. arXiv preprint arXiv:1712.04130.

#11 Correlation-based attacks in time series

Supervisor: Patricia Guerra-Balboa

Possible attacks and their efficiency are a fundamental part of cybersecurity to know what privacy risks we face and what level of practical security our anonymization algorithms represent. A particular field of attacks exists based on the correlation of data. Exploding public or external knowledge the attacker creates correlation models that help to de-anonymize the data and obtain a database closer to the original one. Typical examples are the use of social networks to infer individual attributes or physics models of autocorrelation in trajectory data.

The objective of this project is the understanding, analysis and, if time permits, the design of new attacks based on exploiting the data correlation, such as the well-known filtering attacks. The specific focus of our attacks will be on time series data.

Advanced goal: Propose ideas for new attacks.

Topics: Attacks • Probability • Practical Privacy Analysis

References

- Li, Y., Ren, X., Yang, S., & Yang, X. (n.d.). Impact of Prior Knowledge and Data Correlation on Privacy Leakage: A Unified Analysis. , 14 (9), 2342–2357. DOI: 10.1109/TIFS.2019.2895970

- Cao, Y., Yoshikawa, M., Xiao, Y., & Xiong, L. (2018). Quantifying differential privacy in continuous data release under temporal correlations. IEEE transactions on knowledge and data engineering, 31 (7), 1281–1295.

- Wang, H., Xu, Z., Jia, S., Xia, Y., & Zhang, X. (n.d.). Why current differential privacy schemes are inapplicable for correlated data publishing? , 24 (1), 1–23. Retrieved 2023-03-31, from https://link.springer.com/10.1007/s11280-020-00825-8 DOI: 10.1007/s11280-020-00825-8

#12 Neighbouring Quantum States in QDP

Supervisor: Shima Hassanpour

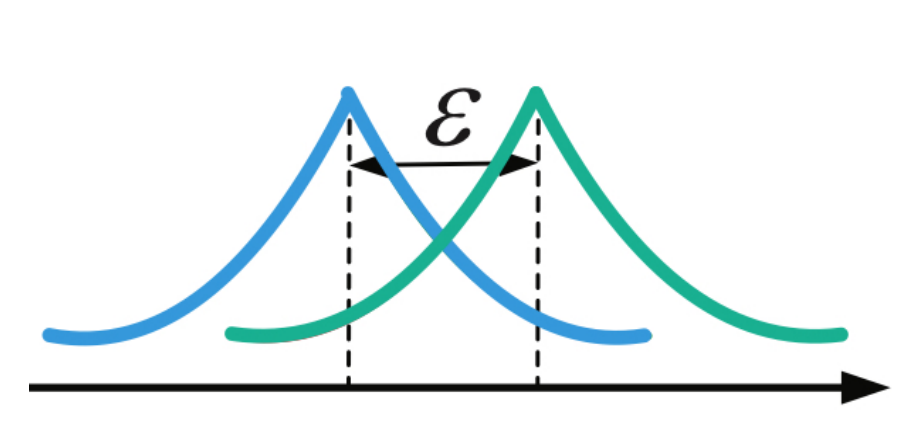

Differential privacy has been extended to quantum computing. One of the main challenges in translating the definition of DP into the quantum setting is to characterise the notion of neighbouring quantum states. Thus, understanding the relationship between these notions is of both theoretical and practical interest.

References

- Zhou, Li, and Mingsheng Ying. "Differential privacy in quantum computation." In 2017 IEEE 30th Computer Security Foundations Symposium (CSF), pp. 249–262. IEEE, 2017.

- Aaronson, Scott, and Guy N. Rothblum. "Gentle measurement of quantum states and differential privacy." In Proceedings of the 51st Annual ACM SIGACT Symposium on Theory of Computing, pp. 322–333. 2019.

- De Palma, Giacomo, Milad Marvian, Dario Trevisan, and Seth Lloyd. "The quantum Wasserstein distance of order 1." IEEE Transactions on Information Theory 67, no. 10 (2021): 6627–6643.

#13 Private Set Intersection

Supervisor: Shima Hassanpour

Private Set Intersection (PSI) is one of the most important privacy issues. The aim of this seminar topic is to survey existing quantum approaches that have been proposed to solve the PSI problem.

References

- Amoretti, Michele. "Private Set Intersection with Delegated Blind Quantum Computing." In 2021 IEEE Global Communications Conference (GLOBECOM), pp. 1–6. IEEE, 2021.

- Shi, Run-Hua, and Yi-Fei Li. "Quantum private set intersection cardinality protocol with application to privacy-preserving condition query." IEEE Transactions on Circuits and Systems I: Regular Papers 69, no. 6 (2022): 2399–2411.

#14 Speech Processing in the Brain

Supervisor: Matin Fallahi

This seminar will focus on speech processing in the brain. Participants will read research papers on this topic and write a short paper summarizing their findings. The seminar will cover the underlying neural mechanisms involved in language processing in the brain. By analyzing primary research papers, participants will develop critical thinking skills and gain a deeper understanding of the latest advancements in the field of cognitive neuroscience. The main question would be how recorded brain activity (MEG, EEG, fMRI, etc.) can be modeled during language-based tasks (reading/listening/speaking).

References

- Millet J, Caucheteux C, Boubenec Y, Gramfort A, Dunbar E, Pallier C, King JR. Toward a realistic model of speech processing in the brain with self-supervised learning. Advances in Neural Information Processing Systems. 2022 Dec 6;35:33428–43.

- Caucheteux C, Gramfort A, King JR. Evidence of a predictive coding hierarchy in the human brain listening to speech. Nature Human Behaviour. 2023 Mar 2:1–2.

#15 Robust Subgroup Multi-Signatures for Consensus

Supervisor: Saskia Bayreuther

Multi-signatures are used to prove that a given subgroup of n parties have all signed a message. Recently, multi-signatures are applied to consensus protocols. A consensus protocol is a decentralized protocol where n parties have the goal to agree on one output value where each party has one input value. Typically, a malicious adversary is assumend, corrupting a subset of parties. Traditional applications of multi-signatures however assume that every party in the set participates in every multi-signature computation and is honest. This cannot be assumed in consensus protocols. Therefore, this paper [1] proposes a new multi-signaute variant called robust subgroup multi-signatures, where any subgroup of signers can produce a multi-signature on behalf of the group even in the presence of a malicious adversary.

References

[1] Galindo, David, and Jia Liu. "Robust Subgroup Multi-signatures for Consensus." Cryptographers’ Track at the RSA Conference. Springer, Cham, 2022. (Springer)